This weekend I was at HackGT here in Atlanta. As much as I enjoy traveling across the country for hackathons, they’re just as fun when they’re in your own backyard.

The Project

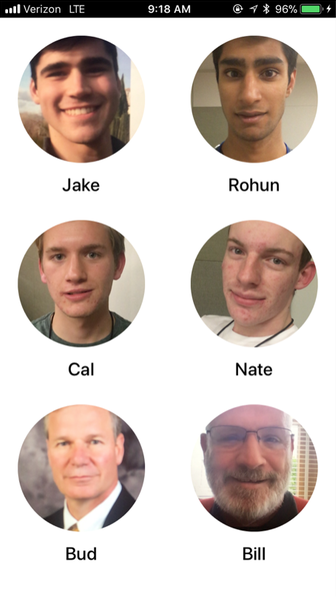

We built an app called Nametag AR that detects and remembers the names of people you meet. The app listens in the background for when people introduce themselves to you. When it detects an introduction (like “Hi, I’m Jake”), it saves the person’s name and an image of their face.
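The app itself relied on Apple’s Speech framework. What follows is not that code. It is a minimal Python sketch, assuming the recognizer has already produced a plain-text transcript, of how an introduction like “Hi, I’m Jake” could be picked out of it. `INTRO_PATTERN`, `extract_name`, and the exact set of greeting phrases are hypothetical choices made for illustration.

```python
import re
from typing import Optional

# Hypothetical introduction pattern: a greeting ("hi", "hello", "hey"), optional
# punctuation, then "I'm", "I am", or "my name is", followed by one word taken as the name.
# Both straight (') and curly (’) apostrophes are accepted, since transcripts may use either.
INTRO_PATTERN = re.compile(
    r"\b(?:hi|hello|hey)\b[,!.]?\s+(?:i['’]m|i\s+am|my\s+name\s+is)\s+([a-z]+)",
    re.IGNORECASE,
)


def extract_name(transcript: str) -> Optional[str]:
    """Return the introduced name (capitalized), or None if no introduction is found."""
    match = INTRO_PATTERN.search(transcript)
    if match is None:
        return None
    return match.group(1).capitalize()


print(extract_name("Hi, I'm Jake"))  # Jake
```

Capturing a single word keeps the sketch simple, but it also means a phrase like “Hi, I’m going to grab coffee” would yield “Going”, so a real detector would need a stricter notion of what counts as a name.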

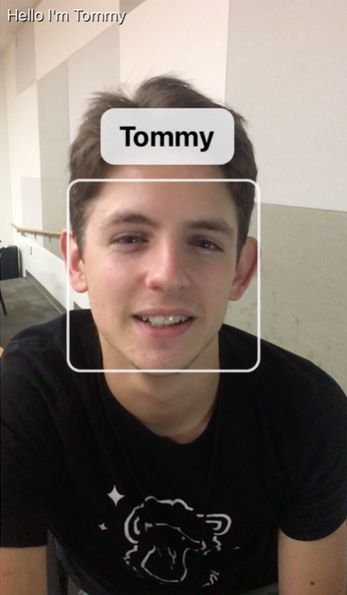

Once you’ve met somebody, Nametag AR will remember them for you. Whenever you see them again, the app overlays their name near their face so you don’t forget who they are.

We implemented the app using a few core technologies. We used Apple’s Speech framework to continuously listen for the “Hi, I’m ___” phrase. Simultaneously, we used Apple’s Vision framework to detect individual faces in the video feed and crop them into standalone images. We combined these two inputs to build a database of known faces, each matched to a name.
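One simple way to combine the two inputs is to pair each detected name with the most recently cropped face, as long as that crop is fresh. The Python sketch below assumes the vision side reports each crop as raw image bytes and that a name is only paired with a crop seen within the last few seconds; `FaceLibrary`, `KnownFace`, `on_face_cropped`, `on_name_heard`, and the 3-second window are all hypothetical, not the app’s actual design.

```python
import time
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class KnownFace:
    name: str
    face_image: bytes  # e.g. an encoded image crop produced by the face detector


@dataclass
class FaceLibrary:
    """Hypothetical store pairing names heard by the speech side with faces seen by the vision side."""
    max_age_seconds: float = 3.0  # assumed window: older crops are not paired with a new name
    known_faces: List[KnownFace] = field(default_factory=list)
    _latest_crop: Optional[bytes] = None
    _latest_crop_time: float = 0.0

    def on_face_cropped(self, crop: bytes, timestamp: Optional[float] = None) -> None:
        """Called for every face crop; only the newest crop is remembered."""
        self._latest_crop = crop
        self._latest_crop_time = time.monotonic() if timestamp is None else timestamp

    def on_name_heard(self, name: str, timestamp: Optional[float] = None) -> bool:
        """Called after an introduction; store (name, face) if a recent crop exists."""
        now = time.monotonic() if timestamp is None else timestamp
        if self._latest_crop is None or now - self._latest_crop_time > self.max_age_seconds:
            return False  # no face seen recently enough to attribute the name to
        self.known_faces.append(KnownFace(name, self._latest_crop))
        return True
```

Pairing by recency keeps the two pipelines independent: each side only reports events, and the library decides whether a name and a face line up closely enough in time to be stored together.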

When the camera is pointed at a face, the app uses Microsoft Azure’s Face API to compare the unknown face against the library of faces it has collected so far. If there’s a match, the name is shown in augmented reality above the person’s face.
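The lookup step can be sketched independently of any particular face service. In this minimal Python version, the comparison is a hypothetical `compare` callable that returns a confidence between 0 and 1 that two images show the same person (in the app, Azure’s Face API filled that role; no Azure calls are made here). The `identify` function name and the 0.5 threshold are assumptions for illustration.

```python
from typing import Callable, Iterable, Optional, Tuple

# Hypothetical comparison: confidence in [0, 1] that two face images show the same person.
CompareFn = Callable[[bytes, bytes], float]


def identify(unknown_face: bytes,
             library: Iterable[Tuple[str, bytes]],
             compare: CompareFn,
             threshold: float = 0.5) -> Optional[str]:
    """Return the name of the best-matching known face, or None if no match is confident enough."""
    best_name: Optional[str] = None
    best_score = float("-inf")
    for name, known_face in library:
        score = compare(unknown_face, known_face)
        if score > best_score:
            best_name, best_score = name, score
    # Only report a name when the best candidate clears the threshold.
    return best_name if best_score >= threshold else None
```

Returning `None` when nothing clears the threshold is what would let an app skip drawing a label for strangers instead of attaching the closest (but wrong) name.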

Pivoting to Azure

We were originally using the Python openface tool to convert faces into high-dimensional vector representations. In theory, you can measure how similar two faces are by taking the Euclidean distance between their vectors: the smaller the distance, the more alike the faces. In practice, though, we found this system very unreliable and noisy. The tool also suffered from long processing times, which hampered the augmented reality experience.
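To make the distance idea concrete, here is a minimal Python sketch comparing two face embeddings by Euclidean distance, d(a, b) = sqrt(sum over i of (a_i - b_i)^2). It does not call openface: the vectors are plain lists of floats standing in for its output, and both the helper names and the 1.0 threshold are hypothetical.

```python
import math
from typing import Sequence


def euclidean_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Straight-line distance between two embedding vectors of equal length."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same dimension")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def probably_same_person(a: Sequence[float], b: Sequence[float], threshold: float = 1.0) -> bool:
    """Smaller distance means more similar faces; the threshold is an assumed value."""
    return euclidean_distance(a, b) < threshold


print(euclidean_distance([0.0, 0.0], [3.0, 4.0]))  # 5.0
```

Everything hinges on a single fixed cutoff, so any noise in the embeddings that pushes two photos of the same person across it turns a match into a miss.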

With about eight hours left in the hackathon, we pivoted away from openface and started using Microsoft Azure’s Face API instead. We’re really happy with this change, because it noticeably improved both the performance and the accuracy of our face-matching system. It was pretty intimidating to make such a fundamental change so late in the game, though, and I was impressed we pulled it off (we only got about half an hour of sleep that night).


The Team

I was originally planning to work with a four-person team, but some registration woes whittled us down to just two people. I wasn’t complaining, though: I really enjoy working with Nate. This was our third hackathon together. Most recently, we won second place at HackCU in Boulder, CO, for building SiriQuery.

On a short tangent, I find working on large teams (four or more people) at hackathons especially hard. When four people have different skill sets, development environments, and interests, it’s tough to come up with a project that gives everybody something to work on that’s both interesting to them and well-scoped to their skill level. I think two or three people is the ideal size for a team that performs really well together.